Artificial intelligence is advancing rapidly, enhancing our digital and creative lives every day. Yet accurately generating human hands remains a persistent challenge. This article explores the intricate anatomy of hands, the limitations of how AI learns, and the common problem of AI producing hands with too few fingers. By understanding these obstacles, along with recent advances from leading AI models and organizations, we can collaborate with AI more effectively and work around its current limitations.

Artificial intelligence (AI) is evolving quickly, changing how we create and interact with digital content. AI tools now produce stunning visuals, and they are becoming more sophisticated as they work their way into our creative and everyday lives. But amid all these breakthroughs, one quirky challenge keeps popping up: getting human hands right. Understanding why AI often misses the mark with hands isn’t just a neat technical puzzle; it’s a window into how AI works and how we can better collaborate with it. By exploring this quirk, we can gain deeper insight into AI’s strengths and limitations, and use it as a more trustworthy and effective tool.

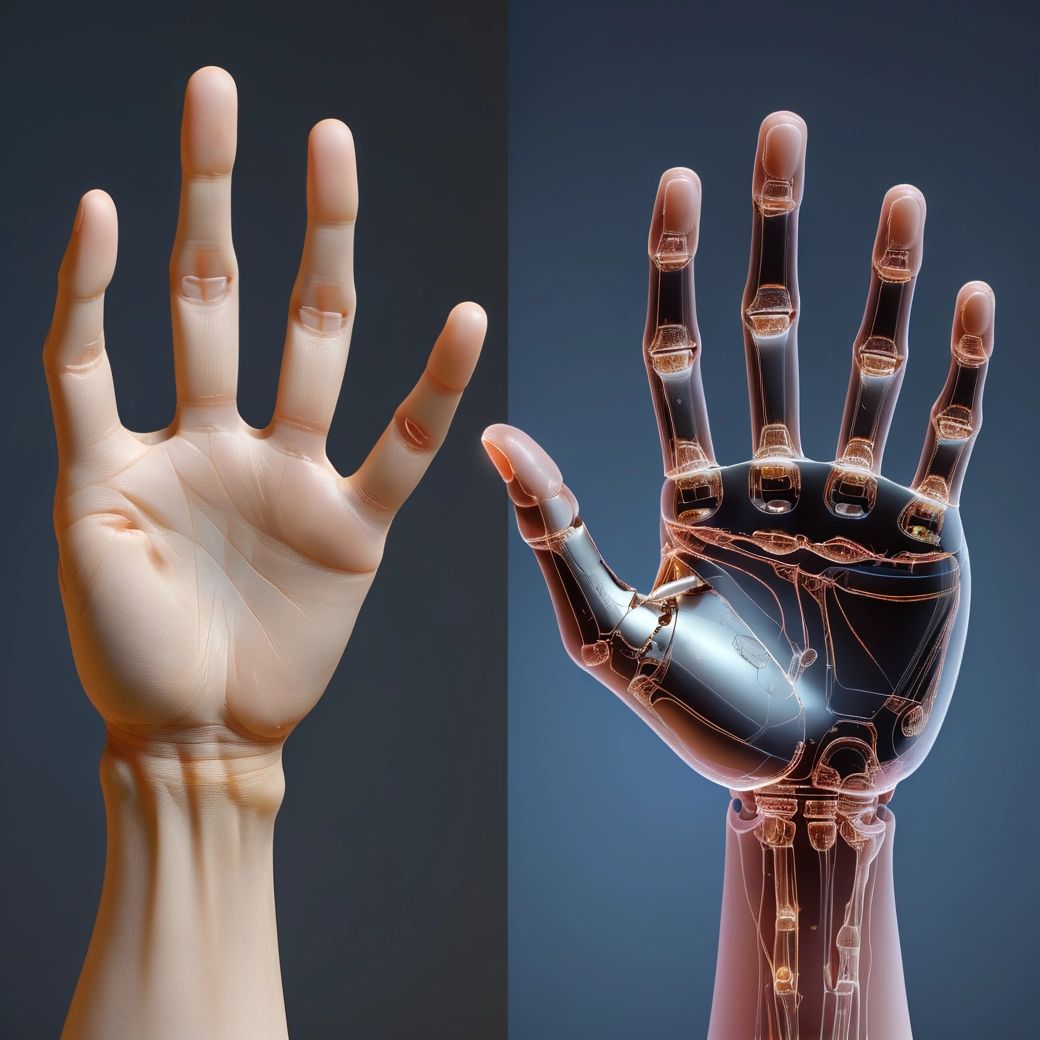

The Complexity of Human Hands

Think about your hands for a second. They’re amazing tools that let you do everything from typing an email to crafting a piece of art. With 27 bones, more than two dozen joints, and a remarkable range of motion, hands are a marvel of biological engineering. It’s no wonder AI finds them tricky to replicate accurately. This complexity is the first big hurdle for generative AI.

Biomechanical Intricacy

Our hands are built for incredible versatility. Each finger can move largely independently, and the opposable thumb adds an extra degree of dexterity. For AI, capturing these nuanced movements and relationships is a tough challenge. Instead of smooth, natural poses, you might end up with hands that look a bit... off. Sometimes they are downright unsettling. It’s like trying to teach a robot the subtle dance of your fingers: challenging, but utterly fascinating.

Variability in Hand Shapes and Sizes

No two hands are exactly alike. They come in different sizes, shapes, and proportions, making the AI’s job even harder[^5]. Imagine trying to draw hands that fit everyone perfectly—it’s a moving target. This diversity means AI has to generalize from a vast array of examples, and sometimes it just doesn’t get it right.

The AI Learning Process

To really get why AI struggles with hands, let’s peek under the hood and see how these models learn and generate images.

Limited Understanding of 3D Structure

Most AI image generators work with 2D data, which means they don’t truly understand the three-dimensional nature of hands. Professor Peter Bentley from University College London puts it well: "These are 2D image generators that have absolutely no concept of the three-dimensional geometry of something like a hand"[^6]. Without this depth, rendering hands from different angles can lead to some pretty wonky results.
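To make the 2D-versus-3D point concrete, here is a minimal Python sketch. It is an illustration only, not how any real image generator works: the fingertip coordinates are made-up values, and `project` and `horizontal_spread` are hypothetical helpers. It rotates a flat, open hand about the vertical axis and then throws away depth, which is roughly what a photograph does.

```python
import math

# Hypothetical fingertip positions (x, y, z) for an open hand facing the camera.
# The numbers are illustrative, not anatomical measurements.
FINGERTIPS = {
    "thumb": (-6.0, 4.0, 0.0),
    "index": (-3.0, 9.0, 0.0),
    "middle": (0.0, 10.0, 0.0),
    "ring": (3.0, 9.0, 0.0),
    "pinky": (6.0, 7.0, 0.0),
}


def project(point, yaw_degrees):
    """Rotate a 3D point about the vertical (y) axis, then drop the depth coordinate."""
    x, y, z = point
    yaw = math.radians(yaw_degrees)
    x_rotated = x * math.cos(yaw) + z * math.sin(yaw)
    return (x_rotated, y)  # orthographic projection: depth is discarded


def horizontal_spread(yaw_degrees):
    """Width of the fingertip cluster in the 2D image at a given viewing angle."""
    xs = [project(p, yaw_degrees)[0] for p in FINGERTIPS.values()]
    return max(xs) - min(xs)


for angle in (0, 60, 90):
    print(angle, round(horizontal_spread(angle), 3))
```

Under these assumptions, the fingertips span about 12 units when the hand faces the camera, about 6 units at 60 degrees, and essentially zero when the hand is seen edge-on. The hand itself never changed, but a model that only ever sees the flattened images has to work out on its own that these very different pixel patterns are the same five-digit object.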

Pattern Recognition vs. Anatomical Knowledge

AI is great at spotting patterns in huge datasets of images, but it doesn’t "know" anatomy the way we do. It might see that fingers are usually grouped together, but it doesn’t inherently understand that a hand should have exactly five digits—four fingers and a thumb. This gap means that while the AI can mimic the general look of a hand, it often misses the finer details that make it look natural.

The Three-Finger Phenomenon

One of the most common quirks in AI-generated images, one that completely freaks me out every time I see it, is a hand with three fingers and a thumb instead of the usual four fingers and a thumb. Let’s break down why this happens:

Data Bias and Representation

AI learns from the images it’s fed, and if many of those images don’t clearly show all five digits, the AI might think fewer fingers are the norm. Think of it as learning to draw hands from blurry photos—it’s easier to guess than to get every detail right.

Simplification of Complex Structures

Sometimes, AI tries to simplify things to make sense of complex structures. In the case of hands, this can mean reducing the number of fingers just to create something hand-like. It’s prioritizing recognition over accuracy, which can lead to some unexpected finger counts.

Pattern Overgeneralization

If the AI often sees hands with only three fingers visible—maybe because of certain poses or angles—it might start overgeneralizing that as the standard. This overreach means it’s replicating incomplete hand structures more frequently than it should.
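Here is a toy Python sketch of that idea. It assumes a made-up training set in which each hand image is reduced to a single number, the count of digits visible in it, and a deliberately naive "model" that just memorizes how often each count appears. Real generators learn far richer statistics, so treat the numbers and the `learned_distribution` helper as hypothetical.

```python
from collections import Counter

# Hypothetical training set: how many digits are visible in each hand image.
# Many photos hide a finger or two behind objects, other fingers, or the frame edge.
visible_digits = [5] * 40 + [4] * 35 + [3] * 15 + [2] * 10


def learned_distribution(counts):
    """Return the share of training images showing each visible-digit count."""
    total = len(counts)
    frequency = Counter(counts)
    return {k: frequency[k] / total for k in sorted(frequency)}


dist = learned_distribution(visible_digits)

# Share of examples in which the model never saw a full five-digit hand.
share_fewer_than_five = sum(p for k, p in dist.items() if k < 5)

print(dist)
print(round(share_fewer_than_five, 2))
```

With these invented counts, only 40% of the examples show all five digits, so a model that simply reproduces what it saw would draw a hand with fewer than five visible digits most of the time. Nothing in the data says the missing fingers still exist behind whatever is hiding them.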

Challenges in Training AI for Hand Generation

Training AI to nail hand generation is no small feat. Here are some of the main challenges:

Insufficient Hand-Focused Data

Good training data is key, and unfortunately, many AI datasets don’t have enough clear, detailed images of hands in all their glory. A spokesperson from Stability AI mentioned, "within AI datasets, human images display hands less visibly than they do faces". Without plenty of quality examples, the AI struggles to learn what accurate hands look like.

Complexity of Hand Movements

Hands can move in so many ways, making it tough to cover every possible pose in training data. This variety means there are gaps in what the AI has seen, leading to difficulties in generating hands that can adapt to different contexts realistically.

Low Margin for Error

Hands don’t forgive mistakes easily. Even a slight error in finger length or position can make a hand look unnatural or just plain weird. Minor errors in other body parts might go unnoticed, but viewers scrutinize hands closely, so precision is a must.

Recent Advancements and Future Prospects

Despite these challenges, AI is making steady progress in getting hands right. Here’s what’s happening:

Focused Training and Dataset Improvements

AI companies are homing in on the hand problem by refining their datasets. They’re prioritizing images in which hands are clearly visible and sidelining those where hands are hidden or only partially shown. This targeted approach helps the AI learn better representations of hands, reducing those pesky inaccuracies.
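As a rough sketch of what that kind of filtering could look like, the Python below keeps only images whose hands are clearly visible. Everything here is an assumption for illustration: the `ImageRecord` type, the `hand_visibility` score, and the 0.8 threshold are hypothetical, and the article’s sources don’t describe any company’s actual pipeline.

```python
from dataclasses import dataclass


@dataclass
class ImageRecord:
    path: str
    # Hypothetical score in [0, 1]; 1.0 means the hand is fully visible.
    # In practice this would have to come from annotation or a hand detector.
    hand_visibility: float


def select_clear_hands(records, min_visibility=0.8):
    """Keep only the records whose hands are visible enough to learn from."""
    return [r for r in records if r.hand_visibility >= min_visibility]


records = [
    ImageRecord("a.png", 0.95),
    ImageRecord("b.png", 0.40),  # hand half hidden in a pocket
    ImageRecord("c.png", 0.85),
    ImageRecord("d.png", 0.10),  # hand almost entirely out of frame
]

kept = select_clear_hands(records)
print([r.path for r in kept])
```

The trade-off is in the threshold: set it too high and the dataset shrinks and loses pose variety, set it too low and the blurry, half-hidden hands creep back in.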

Increased Model Complexity

Some of the most advanced image models, such as Google’s Parti, OpenAI’s DALL-E 3, and Stability AI’s recent releases, handle intricate subjects like human hands noticeably better than their predecessors. Meanwhile, NVIDIA has been working extensively with generative adversarial networks (GANs), and Meta is making strides in AI-driven image synthesis. These more complex models capture more of the tiny details and subtle variations that make hands look natural and lifelike, and they keep pushing generated images closer to feeling truly real.

Continuous Iteration and Improvement

AI isn’t standing still, and neither is hand generation. Continuous updates and refinements are pushing the boundaries of what’s possible. For example, Midjourney rolled out an update in March 2023 specifically aimed at enhancing hand realism. These ongoing improvements mean that the AI is steadily overcoming its earlier limitations.

Closing Words

Generating accurate human hands remains one of the more intriguing challenges in AI image generation. It’s a testament to the complexities involved in teaching machines to mimic our intricate biology. But as AI technology marches forward, we can expect hands to look more natural and precise, shedding this quirky flaw of early generative models.

Understanding these limitations isn’t just about fixing a technical glitch; it’s about appreciating how AI processes the world. Once we see the gap between machine perception and our own intricate reality, we can work alongside AI with more confidence, leaning on its strengths while staying mindful of its quirks.

Sources

- Why Do AI-Generated Hands Look So Bad?

- Why AI Can’t Draw Human Hands: The Role of Image Annotation and ML Models Training in Generative AI

- Why Does AI Draw the Wrong Number of Fingers on a Hand?

- AI Image Generators Finally Figured Out Hands

- Generative AI Models Fail at Creating Human Hands

- Why AI-Generated Hands Are the Stuff of Nightmares, Explained by a Scientist

- Why Does AI Art Screw Up Hands and Fingers?

- The Real Reason AI Art Tools Can’t Create Hands or Feet

- ELI5: Why Does AI-Generated Artwork Have